i decided to make my G Suite “mdmtool” a bit more robust and release-worthy, instead of just proof of concept code.

https://github.com/rickt/mdmtool

enjoy!

Our desktop support & G Suite admin folks needed a simple, fast command-line tool to query basic info about our company’s mobile devices (which are all managed using G Suite’s built-in MDM).

So I wrote one.

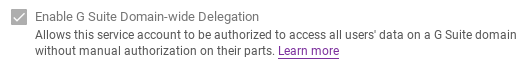

Since this tool runs from the command line, it can’t use any interactive or browser-based authentication, so it authenticates with a service account instead.

Pre-requisites (GCP & G Suite):

- Create a service account (with a JSON key) and find its client_id. Make a note of it!
- Authorize the client_id of your service account for the necessary API scopes. In the Admin Console for your G Suite domain (Admin Console –> Security –> Advanced Settings –> Authentication –> Manage API Client Access), add your client_id in the “Client Name” box, and add https://www.googleapis.com/auth/admin.directory.device.mobile.readonly in the “One or more API scopes” box.

Pre-requisites (Go)

You’ll need to “go get” a few packages:

go get -u golang.org/x/oauth2/google

go get -u google.golang.org/api/admin/directory/v1

go get -u github.com/dustin/go-humanize

Pre-requisites (Environment Variables)

Because it’s never good to store runtime configuration within code, you’ll notice that the code references several environment variables. Set them up to suit your preference, but something like this will do:

export GSUITE_COMPANYID="A01234567"
export SVC_ACCOUNT_CREDS_JSON="/home/rickt/dev/adminsdk/golang/creds.json"
export GSUITE_ADMINUSER="[email protected]"

And finally, the code

Gist URL: https://gist.github.com/rickt/199ca2be87522496e83de77bd5cd7db2

i wanted to try out the automatic loading of CSV data into Bigquery, specifically using a Cloud Function that would automatically run whenever a new CSV file was uploaded into a Google Cloud Storage bucket.

it worked like a champ. here’s what i did to PoC:

$ head -3 testdata.csv
id,first_name,last_name,email,gender,ip_address
1,Andres,Berthot,[email protected],Male,104.204.241.0
2,Iris,Zwicker,[email protected],Female,61.87.224.4

$ gsutil mb gs://csvtestbucket

$ pip3 install google-cloud-bigquery --upgrade

$ bq mk --dataset rickts-dev-project:csvtestdataset

$ bq mk -t csvtestdataset.csvtable \
  id:INTEGER,first_name:STRING,last_name:STRING,email:STRING,gender:STRING,ip_address:STRING

env.yaml:
BUCKET: csvtestbucket
DATASET: csvtestdataset
TABLE: csvtable
VERSION: v14

requirements.txt:
google-cloud
google-cloud-bigquery

*csv
*yaml

$ ls
env.yaml main.py requirements.txt testdata.csv

$ gcloud beta functions deploy csv_loader \
  --runtime=python37 \
  --trigger-resource=gs://csvtestbucket \
  --trigger-event=google.storage.object.finalize \
  --entry-point=csv_loader \
  --env-vars-file=env.yaml
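main.py isn't shown here. As a hedged illustration of what the trigger hands the function: a google.storage.object.finalize event's payload includes (among other fields) the bucket and object name, which is enough to build the gs:// URI a BigQuery load job reads from. Below is a Go sketch of just that step. It reads DATASET and TABLE (and, optionally, BUCKET) from the same env.yaml variables. GCSEvent follows the field names in Google's Go examples for storage-triggered functions. LoadJob and planLoad are hypothetical, and the actual load call through a BigQuery client library is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"strings"
)

// GCSEvent holds the two fields of a finalize event payload this sketch needs.
type GCSEvent struct {
	Bucket string `json:"bucket"`
	Name   string `json:"name"`
}

// LoadJob (hypothetical) describes what to load and where.
type LoadJob struct {
	SourceURI string // e.g. gs://csvtestbucket/testdata.csv
	Dataset   string
	Table     string
}

// planLoad turns a finalize event into a load job. It returns (nil, nil)
// for objects that are not CSV files, meaning "nothing to do".
func planLoad(payload []byte, getenv func(string) string) (*LoadJob, error) {
	var e GCSEvent
	if err := json.Unmarshal(payload, &e); err != nil {
		return nil, fmt.Errorf("decoding event: %w", err)
	}
	if !strings.HasSuffix(strings.ToLower(e.Name), ".csv") {
		return nil, nil
	}
	// If BUCKET is configured, refuse events from any other bucket.
	if want := getenv("BUCKET"); want != "" && want != e.Bucket {
		return nil, fmt.Errorf("event for bucket %q, expected %q", e.Bucket, want)
	}
	return &LoadJob{
		SourceURI: fmt.Sprintf("gs://%s/%s", e.Bucket, e.Name),
		Dataset:   getenv("DATASET"),
		Table:     getenv("TABLE"),
	}, nil
}

func main() {
	payload := []byte(`{"bucket":"csvtestbucket","name":"testdata.csv"}`)
	job, err := planLoad(payload, os.Getenv)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	if job == nil {
		fmt.Println("skipped: not a CSV file")
		return
	}
	fmt.Printf("load %s into %s.%s\n", job.SourceURI, job.Dataset, job.Table)
}
```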

$ gsutil cp testdata.csv gs://csvtestbucket/
Copying file://testdata.csv [Content-Type=text/csv]...
- [1 files][ 60.4 KiB/ 60.4 KiB]
Operation completed over 1 objects/60.4 KiB.

$ gcloud functions logs read
[ ... snipped for brevity ... ]
D csv_loader 274732139359754 2018-10-22 20:48:27.852 Function execution started
I csv_loader 274732139359754 2018-10-22 20:48:28.492 Starting job 9ca2f39c-539f-454d-aa8e-3299bc9f7287
I csv_loader 274732139359754 2018-10-22 20:48:28.492 Function=csv_loader, Version=v14
I csv_loader 274732139359754 2018-10-22 20:48:28.492 File: testdata2.csv
I csv_loader 274732139359754 2018-10-22 20:48:31.022 Job finished.
I csv_loader 274732139359754 2018-10-22 20:48:31.136 Loaded 1000 rows.
D csv_loader 274732139359754 2018-10-22 20:48:31.139 Function execution took 3288 ms, finished with status: 'ok'

looks like the function ran as expected!

$ bq show csvtestdataset.csvtable
Table rickts-dev-project:csvtestdataset.csvtable

   Last modified           Schema           Total Rows   Total Bytes   Expiration   Time Partitioning   Labels
 ----------------- ----------------------- ------------ ------------- ------------ ------------------- --------
  22 Oct 13:48:29   |- id: integer          1000         70950
                    |- first_name: string
                    |- last_name: string
                    |- email: string
                    |- gender: string
                    |- ip_address: string

great! there are now 1000 rows. looking good.

let's compare the first 3 rows of the CSV file

$ egrep '^[1,2,3],' testdata.csv
1,Andres,Berthot,[email protected],Male,104.204.241.0
2,Iris,Zwicker,[email protected],Female,61.87.224.4
3,Aime,Gladdis,[email protected],Female,29.55.250.191

with the first 3 rows of the bigquery table

$ bq query 'select * from csvtestdataset.csvtable \
  where id IN (1,2,3)'
Waiting on bqjob_r6a3239576845ac4d_000001669d987208_1 ... (0s) Current status: DONE
+----+------------+-----------+---------------------------+--------+---------------+
| id | first_name | last_name | email                     | gender | ip_address    |
+----+------------+-----------+---------------------------+--------+---------------+
|  1 | Andres     | Berthot   | [email protected]         | Male   | 104.204.241.0 |
|  2 | Iris       | Zwicker   | [email protected]         | Female | 61.87.224.4   |
|  3 | Aime       | Gladdis   | [email protected]         | Female | 29.55.250.191 |
+----+------------+-----------+---------------------------+--------+---------------+

and whaddyaknow, they match! w00t!

proof of concept: complete!

conclusion: cloud functions are pretty great.

let's say you have some servers in a cluster serving vhost foo.com, and you want to put the access logs from all the webservers for that vhost into Bigquery so you can analyze them, or you just want all the access logs in one place.

in addition to having the raw weblog data, you also want to keep track of which webserver the hits were served by, and what the vhost (Host header) was.

so, foreach() server, we will install fluentd, configure it to tail the nginx access log, and upload everything to Bigquery for us.

it worked like a champ. here’s what i did to PoC:

install fluentd (td-agent) on each webserver:

$ curl -L https://toolbelt.treasuredata.com/sh/install-ubuntu-xenial-td-agent3.sh | sh

$ bq mk --dataset rickts-dev-project:nginxweblogs
Dataset 'rickts-dev-project:nginxweblogs' successfully created.

schema.json:

[
  {"name": "agent",    "type": "STRING"},
  {"name": "code",     "type": "STRING"},
  {"name": "host",     "type": "STRING"},
  {"name": "method",   "type": "STRING"},
  {"name": "path",     "type": "STRING"},
  {"name": "referer",  "type": "STRING"},
  {"name": "size",     "type": "INTEGER"},
  {"name": "user",     "type": "STRING"},
  {"name": "time",     "type": "INTEGER"},
  {"name": "hostname", "type": "STRING"},
  {"name": "vhost",    "type": "STRING"}
]

$ bq mk -t nginxweblogs.nginxweblogtable schema.json
Table 'rickts-dev-project:nginxweblogs.nginxweblogtable' successfully created.

$ sudo /usr/sbin/td-agent-gem install fluent-plugin-bigquery --no-ri --no-rdoc -V

configure fluentd to read the nginx access log for this vhost and upload it to Bigquery (while also adding the server hostname and vhost name) by creating an /etc/td-agent/td-agent.conf similar to this: https://gist.github.com/rickt/641e086d37ff7453b7ea202dc4266aa5 (unfortunately WordPress won’t render it properly, sorry)

You’ll note we are using the record_transformer fluentd filter plugin to transform the access log entries with the webserver hostname and webserver virtualhost name before injection into Bigquery.
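fluentd handles this transformation declaratively in td-agent.conf. To show what ends up in each row, here's a Go sketch of equivalent logic, assuming nginx's default `combined` log format. It parses one access-log line into the schema.json columns, then adds the hostname and vhost the way record_transformer does. The regular expression, the Record type and parseLine are my own illustration, not fluentd's parser. The sample line's timestamp is made up, and the `time` column is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"regexp"
	"strconv"
)

// Record mirrors the schema.json columns (time omitted in this sketch).
type Record struct {
	Agent    string `json:"agent"`
	Code     string `json:"code"`
	Host     string `json:"host"`
	Method   string `json:"method"`
	Path     string `json:"path"`
	Referer  string `json:"referer"`
	Size     int64  `json:"size"`
	User     string `json:"user"`
	Hostname string `json:"hostname"`
	Vhost    string `json:"vhost"`
}

// combined matches nginx's default "combined" format:
// $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
var combined = regexp.MustCompile(`^(\S+) \S+ (\S+) \[[^\]]+\] "(\S+) (\S+)[^"]*" (\d{3}) (\d+|-) "([^"]*)" "([^"]*)"`)

// parseLine parses one access-log line and stamps on hostname and vhost,
// like record_transformer adding fields before upload.
func parseLine(line, hostname, vhost string) (Record, error) {
	m := combined.FindStringSubmatch(line)
	if m == nil {
		return Record{}, fmt.Errorf("unparseable log line: %q", line)
	}
	var size int64
	if m[6] != "-" {
		n, err := strconv.ParseInt(m[6], 10, 64)
		if err != nil {
			return Record{}, err
		}
		size = n
	}
	return Record{
		Host: m[1], User: m[2], Method: m[3], Path: m[4], Code: m[5],
		Size: size, Referer: m[7], Agent: m[8],
		Hostname: hostname, Vhost: vhost,
	}, nil
}

func main() {
	line := `::1 - - [22/Oct/2018:13:48:29 -0700] "GET /index.html?text=helloworld HTTP/1.1" 200 14 "-" "curl/7.47.0"`
	rec, err := parseLine(line, "hqvm", "rickts-dev-box.fix8r.com")
	if err != nil {
		fmt.Println(err)
		return
	}
	out, _ := json.Marshal(rec)
	fmt.Println(string(out))
}
```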

make sure the user fluentd runs as (td-agent by default) has read access to your nginx access logs, then start (or restart) fluentd:

$ sudo systemctl start td-agent.service

$ hostname
hqvm
$ curl http://localhost/index.html?text=helloworld
you sent: "helloworld"

$ bq query 'SELECT * FROM nginxweblogs.nginxweblogtable WHERE path = "/index.html?text=helloworld"'
+-------------+------+------+--------+-----------------------------+---------+------+------+------+----------+--------------------------+
| agent       | code | host | method | path                        | referer | size | user | time | hostname | vhost                    |
+-------------+------+------+--------+-----------------------------+---------+------+------+------+----------+--------------------------+
| curl/7.47.0 | 200  | ::1  | GET    | /index.html?text=helloworld | -       |   14 | -    | NULL | hqvm     | rickts-dev-box.fix8r.com |
+-------------+------+------+--------+-----------------------------+---------+------+------+------+----------+--------------------------+

proof of concept: complete!

conclusion: pushing your web access logs into Bigquery is extremely easy, not to mention a smart thing to do.

the benefits grow as your server and vhost count grows. try consolidating, compressing and analyzing months of logs from N servers in-house, and you’ll see the benefits of Bigquery right away.

enjoy!

it’s been really hot in Los Angeles recently, and i realised i was switching back to my web browser from the Slack app to find out the current temperature downstairs an awful lot before leaving the office.

i realised that a /weather command would be far more efficient. say hello to slack-weather-bot.

a simple golang backend that takes a request for /weather?zip=NNNNN and posts a quick one-liner of current conditions back to you on Slack. enjoy.

https://github.com/rickt/slack-weather-bot

package slackweatherbot

import (
	"net/http"
	"os"
	"strconv"
	"text/template"

	owm "github.com/briandowns/openweathermap"
	"golang.org/x/net/context"
	"google.golang.org/appengine"
	"google.golang.org/appengine/log"
	"google.golang.org/appengine/urlfetch"
)

const (
	weatherTemplate = `It's currently {{.Main.Temp}} °F ({{range .Weather}} {{.Description}} {{end}}) `
)

// get the current weather conditions from openweather; returns nil on any error
func getCurrent(zip int, units, lang string, ctx context.Context) *owm.CurrentWeatherData {
	// create a urlfetch http client because we're in appengine and can't use net/http default
	cl := urlfetch.Client(ctx)
	// establish connection to openweather API
	cc, err := owm.NewCurrent(units, lang, owm.WithHttpClient(cl))
	if err != nil {
		log.Errorf(ctx, "ERROR getCurrent() during owm.NewCurrent: %s", err)
		return nil
	}
	// fetch current conditions for this US zip code
	if err := cc.CurrentByZip(zip, "US"); err != nil {
		log.Errorf(ctx, "ERROR getCurrent() during CurrentByZip: %s", err)
		return nil
	}
	return cc
}

// redirect requests to / to /weather
func handler_redirect(w http.ResponseWriter, r *http.Request) {
	http.Redirect(w, r, "/weather", http.StatusFound)
}

// handle requests to /weather
// currently supports parameter of ?zip=NNNNN or no zip parameter, in which case DEFAULT_ZIP is used
func handler_weather(w http.ResponseWriter, r *http.Request) {
	// create an appengine context so we can log
	ctx := appengine.NewContext(r)
	// check the parameters; if no zip parameter given, get the DEFAULT_ZIP from our env vars
	zip := r.URL.Query().Get("zip")
	if zip == "" {
		zip = os.Getenv("DEFAULT_ZIP")
	}
	// convert the zip string to an int because that's what openweather wants
	zipint, err := strconv.Atoi(zip)
	if err != nil {
		log.Errorf(ctx, "ERROR handler_weather() zip conversion problem: %s", err)
		http.Error(w, "invalid zip code", http.StatusBadRequest)
		return
	}
	// get the current weather data; bail out rather than render a nil pointer
	wd := getCurrent(zipint, os.Getenv("UNITS"), os.Getenv("LANG"), ctx)
	if wd == nil {
		http.Error(w, "could not fetch current weather", http.StatusBadGateway)
		return
	}
	// make the template
	tmpl, err := template.New("weather").Parse(weatherTemplate)
	if err != nil {
		log.Errorf(ctx, "ERROR handler_weather() during template.New: %s", err)
		http.Error(w, "internal error", http.StatusInternalServerError)
		return
	}
	// execute the template, writing the one-liner straight to the response
	if err := tmpl.Execute(w, wd); err != nil {
		log.Errorf(ctx, "ERROR handler_weather() during template.Execute: %s", err)
	}
}

// because we're in appengine, there is no main()
func init() {
	http.HandleFunc("/", handler_redirect)
	http.HandleFunc("/weather", handler_weather)
}

// EOF

are you curious if your [corporate] Slack users are logging into Slack using a Slack desktop or mobile app, or if they’re just using the Slack webpage? here’s some example code to call the slack team.accessLogs API to output which of your Slack users are not using a desktop or mobile Slack app.

https://github.com/rickt/golang-slack-tools/blob/master/slackaccessloglooker.go
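The linked code isn't reproduced here, but the core idea can be sketched. team.accessLogs returns a `logins` array whose entries include `username` and `user_agent`, so you can flag users none of whose logins came from an app user agent. The substrings in appMarkers are assumptions about what Slack's desktop and iOS app user agents contain, so check them against your own team's logs. webOnlyUsers is a hypothetical helper, the sample JSON is made up, and paging through results is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"strings"
)

// accessLogsResponse holds the parts of a team.accessLogs reply used here.
type accessLogsResponse struct {
	OK     bool    `json:"ok"`
	Logins []login `json:"logins"`
}

type login struct {
	UserID    string `json:"user_id"`
	Username  string `json:"username"`
	UserAgent string `json:"user_agent"`
}

// appMarkers are ASSUMED user-agent substrings for Slack desktop/mobile apps.
var appMarkers = []string{"Slack_SSB", "com.tinyspeck.chatlyio", "Slack/"}

// webOnlyUsers returns, sorted, the usernames with no login from an app user agent.
func webOnlyUsers(body []byte, markers []string) ([]string, error) {
	var resp accessLogsResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return nil, err
	}
	if !resp.OK {
		return nil, fmt.Errorf("team.accessLogs returned ok=false")
	}
	usesApp := map[string]bool{}
	for _, l := range resp.Logins {
		if _, seen := usesApp[l.Username]; !seen {
			usesApp[l.Username] = false
		}
		for _, m := range markers {
			if strings.Contains(l.UserAgent, m) {
				usesApp[l.Username] = true
				break
			}
		}
	}
	var out []string
	for user, app := range usesApp {
		if !app {
			out = append(out, user)
		}
	}
	sort.Strings(out)
	return out, nil
}

func main() {
	// Hypothetical response; real data comes from calling team.accessLogs with an admin token.
	sample := []byte(`{"ok":true,"logins":[
		{"user_id":"U1","username":"alice","user_agent":"Mozilla/5.0 (Macintosh) Slack_SSB/3.3.3"},
		{"user_id":"U2","username":"bob","user_agent":"Mozilla/5.0 (Windows NT 10.0) Chrome/70.0"}]}`)
	users, err := webOnlyUsers(sample, appMarkers)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("web-only users:", strings.Join(users, ", "))
}
```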

stable release of Slack Translator Bot.

http://github.com/rickt/slack-translator-bot

what is Slack Translator Bot? the [as-is demo] code gets you a couple of Slack /slash commands that let you translate from English to Japanese, and vice versa.

the screenshot below shows an example response to a Slack user wanting to translate “the rain in spain falls mainly on the plane” by typing:

within slack:

TL;DR/HOW-TO

stable release of Slack Team Directory Bot.

http://github.com/rickt/slack-team-directory-bot

what is Slack Team Directory Bot? you get a Slack /slash command that lets you search your Slack Team Directory quick as a flash.

the screenshot below shows an example response to a Slack user trying to find someone in your accounting department by typing:

within slack:

TL;DR/HOW-TO

http://github.com/rickt/slack-team-directory-bot/blob/master/slackteamdirectorybot.go


i’ve updated my example Golang code that authenticates with the Core Reporting API using service account OAuth2 (two-legged) authentication to use the newly updated golang.org/x/oauth2 library.

my previous post & full explanation of service account pre-reqs/setup:

http://code.rickt.org/post/142452087425/how-to-download-google-analytics-data-with-golang

full code:

have fun!

drop me a line or say hello on twitter if any questions.

stable release of my Google App Engine-modified fork of https://github.com/bluele/slack. i have this working in production on several App Engine-hosted /slash commands & bots.

http://github.com/rickt/slack-appengine

the TL;DR on my modifications:

https://github.com/rickt/slack-appengine